Apple announced that a slew of new accessibility updates are coming to iOS 17, including a new feature that lets you FaceTime your loved ones without uttering a single syllable. How? You’ll have the option to type words into the FaceTime app, which will, in turn, “speak out” the text to the person on the receiving end of the call.

And that’s just the beginning. The Cupertino-based tech giant also revealed several other accessibility features that are slated to roll out to supported iPhones by the end of this year.

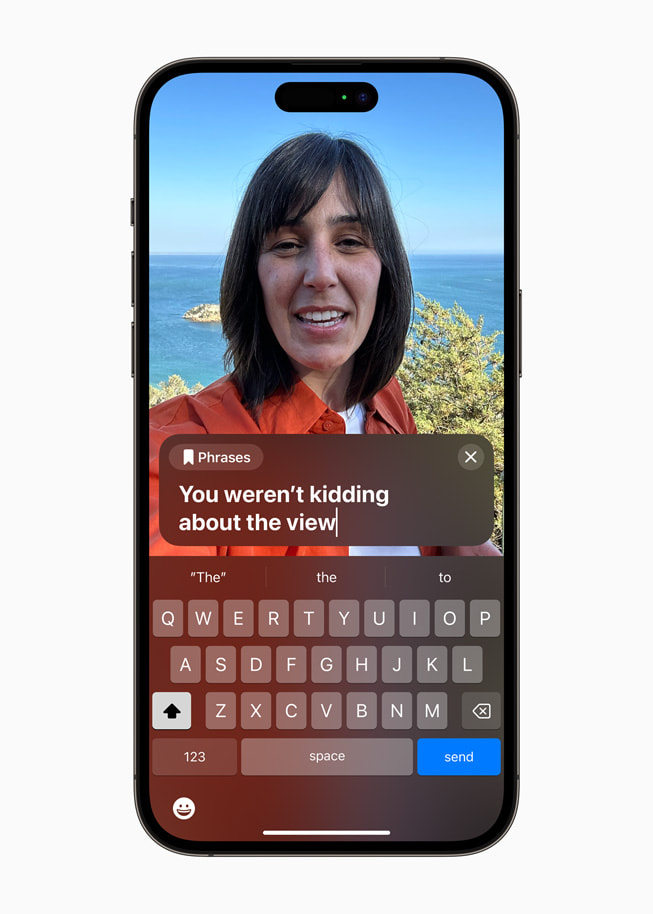

iOS 17 Live Speech

As mentioned, you can type what you’d like to say to your loved ones on FaceTime with an iOS 17 feature called Live Speech. The feature isn’t limited to FaceTime, either: you can also use it during phone calls and in-person conversations, where your device speaks the typed text aloud for you.

Users will also have quick access to commonly used phrases, which helps conversations with friends, family, and colleagues flow more smoothly. This accessibility feature is designed for nonspeaking users, but it can also help people who have temporarily lost their voice (e.g., due to illness or after straining their vocal cords during a jam session).

Live Speech will be available on iPhone, iPad, and Mac.

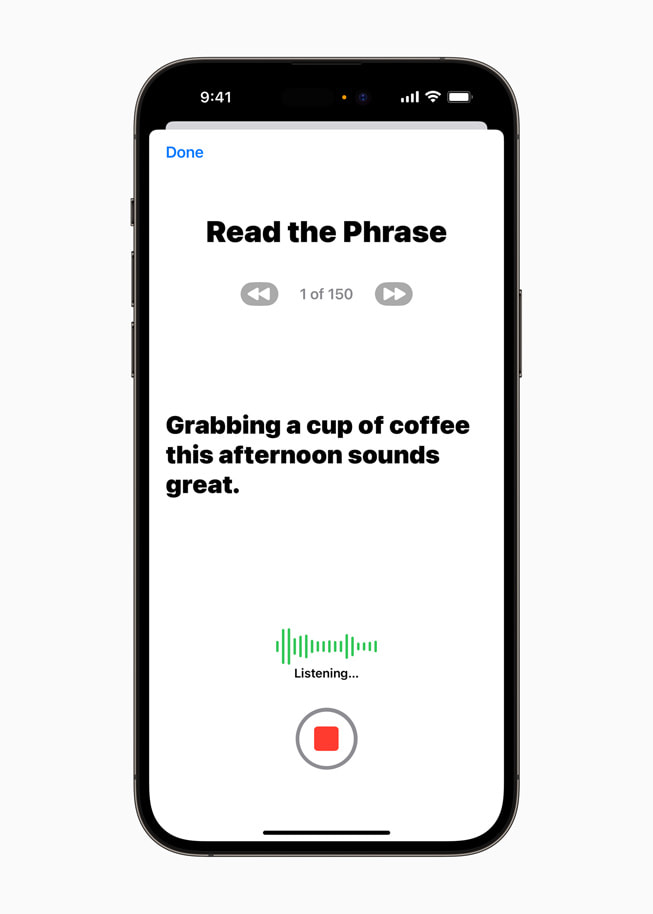

iOS 17 Personal Voice

If you don’t want a robovoice to speak on your behalf, don’t worry. AI is here to save the day. Apple is rolling out a new feature called Personal Voice that lets you create a voice that imitates the way you sound.

You create it by reading a randomized set of text prompts aloud until you’ve recorded 15 minutes of audio (this can be done on an iPhone or iPad). Once you’re done, Personal Voice integrates with Live Speech, so you can talk to loved ones in a voice that sounds like your own.

Personal Voice takes advantage of the on-device machine-learning capabilities of your iPhone and iPad.

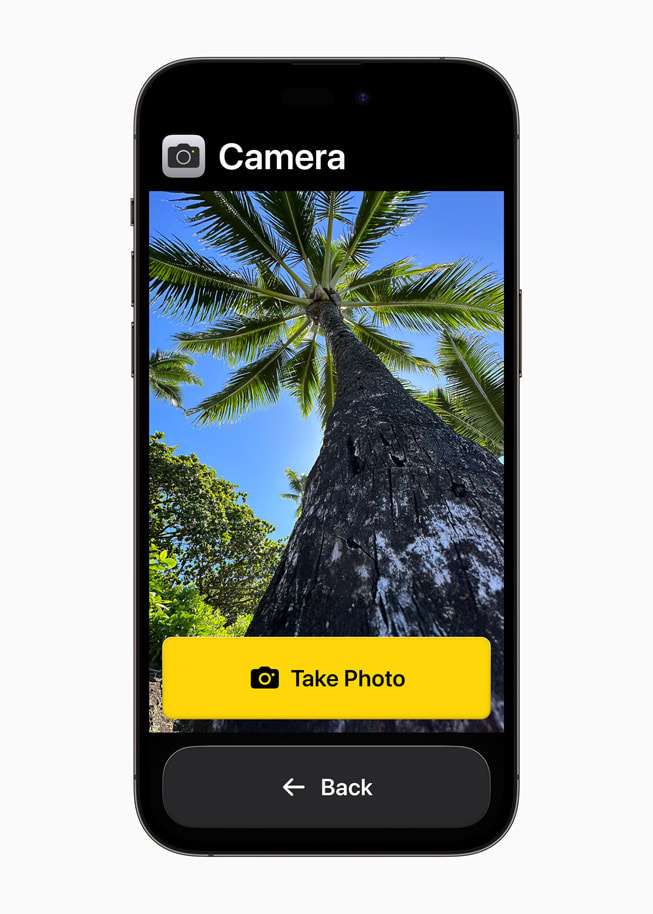

iOS 17 Assistive Access

Assistive Access strips apps down to their essentials, which benefits users with cognitive disabilities. For example, with Assistive Access, the Camera app is pared down to just a viewfinder, a “Take photo” button, and a “Back” button, which helps lighten the cognitive load.

Another example is the Messages app, which offers an emoji-only keyboard in Assistive Access mode. Plus, for easy access, there’s a button that lets users record a video message to share with others. On top of that, the Home Screen can be laid out in a grid-based or row-based format, making it easier on users’ eyes.

Apple says Assistive Access was built with feedback from users with cognitive disabilities, so that iPhone and iPad keep their essential core features without overwhelming users with too much stimulus.

iOS 17 Detection Mode in Magnifier

Users who are blind or have low vision can use a new feature called Detection Mode, which lives in the Magnifier app. With it, users can open Magnifier and point the camera at real-world physical objects that carry text labels, and the device will read those labels aloud.

For example, if you’re having trouble reading the labels on your microwave, you can point the camera at the text and the device will read it out loud for you. Apple says this is made possible by on-device machine learning and the LiDAR Scanner found on supported iPhone and iPad models.

These features are expected to arrive in iOS 17, alongside the new iPhone 15 series, later this year.

notebook.co.id: information and reviews on notebooks, laptops, tablets, and PCs

notebook.co.id informasi dan review notebook laptop tablet dan pc