Artificial intelligence: it’s brilliant, isn’t it? I love AI so much I want ChatGPT to adopt me and tell me it’s proud. That’s the kind of innocent, wholesome experience I want with AI, anyway, unlike most of the disturbing things your average rotter would get up to if given the chance.

And that’s the problem: they’ve had the chance. In fact, at this very minute, beyond the surface-level applications of AI in software like Midjourney or Meta’s MusicGen that make us all feel like Pablo Picasso or Rick Rubin, nefarious forces are doing all sorts of wrong with the same tech that powers even Microsoft’s lovable AI chatbot, Bing Chat.

So, readers, let’s hop aboard the Good Ship Laptop and sail our way down a river of doom and gloom, chowing down on black pills as if they were Skittles along the way. Let’s do away with the glamor and gloss of our supposed coexistence with AI.

Instead, we should face up to how likely it is that our digital deities aren’t here to carve out a human utopia, but a putrid dystopia of crime, violence, blackmail, surveillance, and profiling, as we slog our way through the wanton misery that is the list of the most disturbing ways AI is currently being used.

1. Omnipresent surveillance

According to texperts/techsperts Tristan Harris and Aza Raskin of the Center for Humane Technology, if there was ever any hope that we as a species would treat the book Nineteen Eighty-Four as a warning rather than an instruction manual, it has likely just died.

The pair spoke earlier this year about how we fundamentally misunderstand the limits of the Large Language Models (LLMs) we currently interact with in software like Google Bard or ChatGPT. We presume that by “language” we mean human language, when in reality, to a computer, everything is a language. This has allowed researchers to train an AI on brain scan images and have it begin to decode, loosely but with surprising accuracy, the thoughts in our heads.

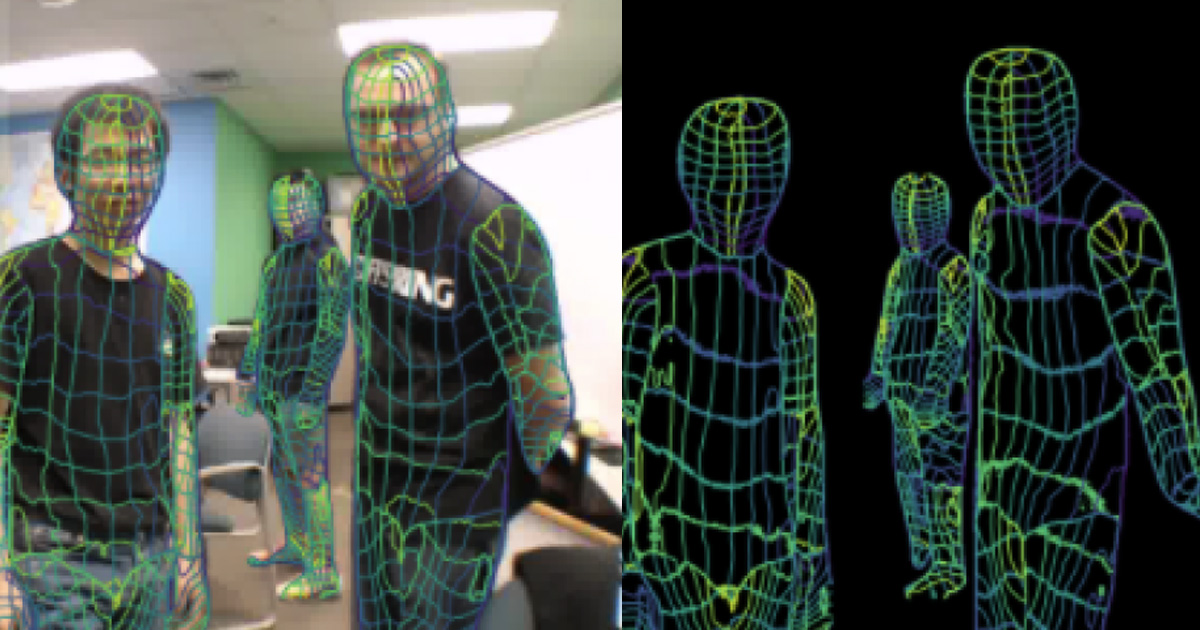

Another example saw researchers use an LLM to monitor the radio signals that exist all around us. The AI was trained using two cameras in stereoscopic alignment: one monitoring a room with people in it, the other monitoring the radio signals within. After researchers removed the traditional camera, the system was able to accurately recreate live events in the room just by analyzing the radio signals.

Newsflash: AI has now brought wall hacks into real life. All of which points to a future where privacy is no longer an option, potentially not even within your own head.

2. Lethal autonomous weapons systems (or, Terminators)

War. Huh, yeah. What is it good for? War used to be all about noble knights on steeds windmilling into their enemies while wielding sword and steel slate. It still involves slates, but now they’re the Android tablet variety, attached to an Xbox Wireless Controller by some cheaply made plastic mount and guiding Tomahawk missiles into people’s homes from 5,000 miles away. Chivalry is indeed dead.

Of course, even though the world’s militaries have been doing their darndest to turn real-life warfare into some sort of Call of Duty kill streak simulator, that’s just not enough for some. We’ve all launched a cruise missile in Modern Warfare only to miss absolutely everyone on the map and quit the lobby in shame (haven’t we?) and that kind of inaccuracy just won’t do in real life when you’re dealing with a $2 million warhead.

Instead, we’ve turned to machines to do the dirty work for us. Lethal autonomous weapons systems allow us to completely detach from the act of butchering one another over land and oil, or mean tweets from opposing heads of state. Self-piloting, auto-targeting, remorselessly engaging drones fly their way into warzones to indiscriminately mow down anyone who looks like they’re not of “our ilk.”

The most prevalent of these is likely the STM Kargu (though there’s no way of knowing for sure). STM, the company that makes these autonomously piloted threats, refers to them as loitering munitions, though everyone else seems to prefer the more accurate name of “suicide drones.” The drones are released in swarms and equipped with facial recognition systems, allowing them to freely hunt down targets before dive-bombing into them and detonating themselves in a blaze of Geneva Convention-defying glory.

3. Generative blackmail material

Fake images aren’t anything new. Skilled users have been fooling people with convincing Photoshops for decades. However, we suddenly find ourselves in a position where it takes no skill at all to get even better results. And it’s not just pictures, either: video, handwriting, and even voices can be faked. If we look at the tech behind the Vision Pro’s “Spatial Personas,” it’s not too hard to imagine somebody wearing your digital skin in the near future and causing you all sorts of grief.

Of course, it’s not that hard to imagine, because it’s already happening. Recently, the FBI had to warn the public about new extortion methods enabled by AI software that give ne’er-do-wells the opportunity to create fake, misleading, or compromising images and videos of people. Worse still, it doesn’t take a Talented Mr. Ripley to achieve these results: the bar for entry into this criminal enterprise is so low that a few public social media photos, or a handful of seconds from a public YouTube video, will suffice.

Online deepfakery is so prevalent that some companies refuse to release tools publicly once they’ve developed them, out of fear of how they’ll be used. Most recently, Facebook owner Meta did just that after announcing Voicebox, the most powerful text-to-speech AI generation software developed so far. Meta deemed the technology too unethical for wide release, knowing full well how quickly it would be exploited. Yet like lambs to the slaughter, we still research, fund, and develop these tools of mass social destruction.

Not that it matters; scammers have already found ways of doing it themselves. Deepfake phone calls to friends and family members asking for money or personal information are on the rise, leading me to firmly believe we now live in a post-truth world. You’ll no longer be able to trust anything you can’t see with your own eyes or touch with your own hands. All because there’s a chance somebody might be able to steal $50 from your granny, or blackmail you into paying a $100 ransom or else they’ll send fictitious photos to your friends and family.

4. Crafting spAIware

There’s been a lot of buzz in security circles lately about the threat posed by AI-generated malware and spyware. It’s an issue giving security analysts sleepless nights, with many believing it’s only a matter of time before our ability to defend against cyberattacks is rendered virtually nil. Now that I think about it, maybe “buzz” isn’t the right word. But then again, I don’t know how to spell the onomatopoeia for alarm bells blaring and people screaming in fear.

As yet, there are no confirmed reports of any AI-generated malware or spyware out in the wilds of the internet, but give it time. Speaking with Infosecurity, Juhani Hintikka, CEO of security firm WithSecure, confirmed his company had witnessed numerous samples of malware freely generated by ChatGPT. As if this weren’t worrying enough, Hintikka also pointed out how ChatGPT’s ability to vary its output could lead to mutations and more “polymorphic” malware that is even harder for defenders to detect.

WithSecure’s head of threat intelligence, Tim West, pointed out the main issue at hand: “ChatGPT will support software engineering for good and bad.” On the ease of access for those seeking to do harm, West also said that OpenAI’s chatbot “lowers the barrier for entry for the threat actors to develop malware.” Previously, threat actors needed to invest considerable amounts of time in writing their malicious code. Now, anybody can theoretically use ChatGPT to generate it, meaning the number of threat actors, and of the threats they produce, could escalate exponentially.

It’s only a matter of time before the dam bursts and AI ravages our internet safety. While we can use AI to fight back, we’d just be swimming against the tide, given the untold number of threats that scenarios like the one WithSecure described could send our way. At the minute, all we can do is wait for the seemingly inevitable onslaught to arrive. That’s nice to think about.

5. Predictive policing

At this point, you’re probably assuming I ran out of things to point to after four and decided to rip off Minority Report, but I haven’t. Sadly, this too is actually happening. Law enforcement agencies around the world are currently chasing the tantalizing prospect of stopping crimes before they begin, using algorithms to predict where crime is likely to happen and making sure their presence is felt in those areas to dissuade would-be criminals.

But can you truly predict crime? Researchers at the University of Chicago believe so. They’ve developed a new algorithm that forecasts crime by learning patterns in time and geographic location. The algorithm can reportedly predict crimes up to a week in advance with about 90% accuracy.

That doesn’t sound all that bad, so why is it on this list? Less crime is a good thing; who could argue against that? Well, how about the people of color who routinely appear to be targeted by these algorithms, for one? After all, an algorithm is just a way of calculating something, and it’s only as good as the data fed into it.

A history of police bias in places like the United States could result in incorrect predictions, racial profiling of the innocent, and an increased police presence in communities of color. An increased police presence by its very nature leads to more policing, and therefore more recorded crime, in those areas. That further skews the data, deepening the algorithm’s predictive bias against these communities and justifying an even larger police presence, which starts the entire process over again.
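To make that feedback loop a little more concrete, here’s a deliberately oversimplified toy simulation in Python. It’s a sketch built on loudly stated assumptions, not a model of how any real predictive-policing product works: two hypothetical neighborhoods with identical true crime rates, a crude “prediction” rule that sends every patrol to whichever neighborhood has the most recorded crime, and crime that only gets recorded where officers actually are. The function and parameter names (`simulate_feedback_loop`, `true_rate`, `initial_records`, `patrols_per_day`) are made up for illustration.

```python
def simulate_feedback_loop(true_rate, initial_records, days, patrols_per_day):
    """Toy model of a predictive-policing feedback loop (hypothetical).

    true_rate[i]       -- crimes detected per patrol in neighborhood i
    initial_records[i] -- historical recorded crime in neighborhood i
    Returns the recorded crime counts after `days` days.
    """
    records = list(initial_records)
    for _ in range(days):
        # Assumed "prediction": flag the neighborhood with the most recorded crime.
        hotspot = max(range(len(records)), key=lambda i: records[i])
        # All patrols go to the flagged neighborhood, and crime is only
        # recorded where officers are present, so only its count grows.
        records[hotspot] += true_rate[hotspot] * patrols_per_day
    return records


# Two neighborhoods with identical true crime rates, but a slightly
# skewed history (11 vs. 10 recorded incidents).
records = simulate_feedback_loop(
    true_rate=[0.5, 0.5], initial_records=[11, 10], days=30, patrols_per_day=10
)
print(records)  # [161.0, 10]
print(f"Share of recorded crime in neighborhood 0: {records[0] / sum(records):.0%}")
```

Even though both neighborhoods have exactly the same underlying crime rate, a one-incident head start snowballs into roughly 94% of all recorded crime after 30 simulated days. Real systems allocate patrols far less crudely than this winner-takes-all rule, so treat it purely as an illustration of the self-reinforcing mechanism, not a measurement of it.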

Have you seen the film Two Distant Strangers? Predictive policing might as well be its prequel.

Outlook

So there you have it, five unsettling things AI is currently being used for online and around the world. Are you still feeling good about the AI revolution? Or have your eyes been opened to the potential threats we now face as a society?

Maybe these things don’t faze you much. Maybe you think a future in which killer police drones circle neighborhoods 24/7, because a mysterious algorithm has flagged likely future rabble-rousers after scanning you through the walls of your home by piggybacking on spyware-riddled Wi-Fi radio waves, waiting for anyone to make one wrong move, will lead to a better and brighter society.

Who knows? Not me. All I know is that the future is looking pretty horrifying right about now.